Docker is an open platform for developing, shipping, and running applications. It is an open-source engine that automates the deployment of applications into containers. It was written by the team at Docker, Inc. (formerly dotCloud Inc., an early player in the Platform-as-a-Service (PaaS) market) and released under the Apache 2.0 license.

Docker was first released in March 2013, and it has since become a buzzword in modern software development, especially on Agile projects.

On top of a virtualized container execution environment, Docker adds an application deployment engine. It’s designed to provide a lightweight and fast environment for running your code, as well as an efficient workflow to get that code from your laptop to your test environment and eventually into production. Docker is quite easy to use. Indeed, you can get started with Docker on a minimal host running nothing but a compatible Linux kernel and a Docker binary.

Docker’s mission is to provide:

Having explained what Docker is, we will now mention some of its features:

Let’s look at the core components that compose the Docker Community Edition:

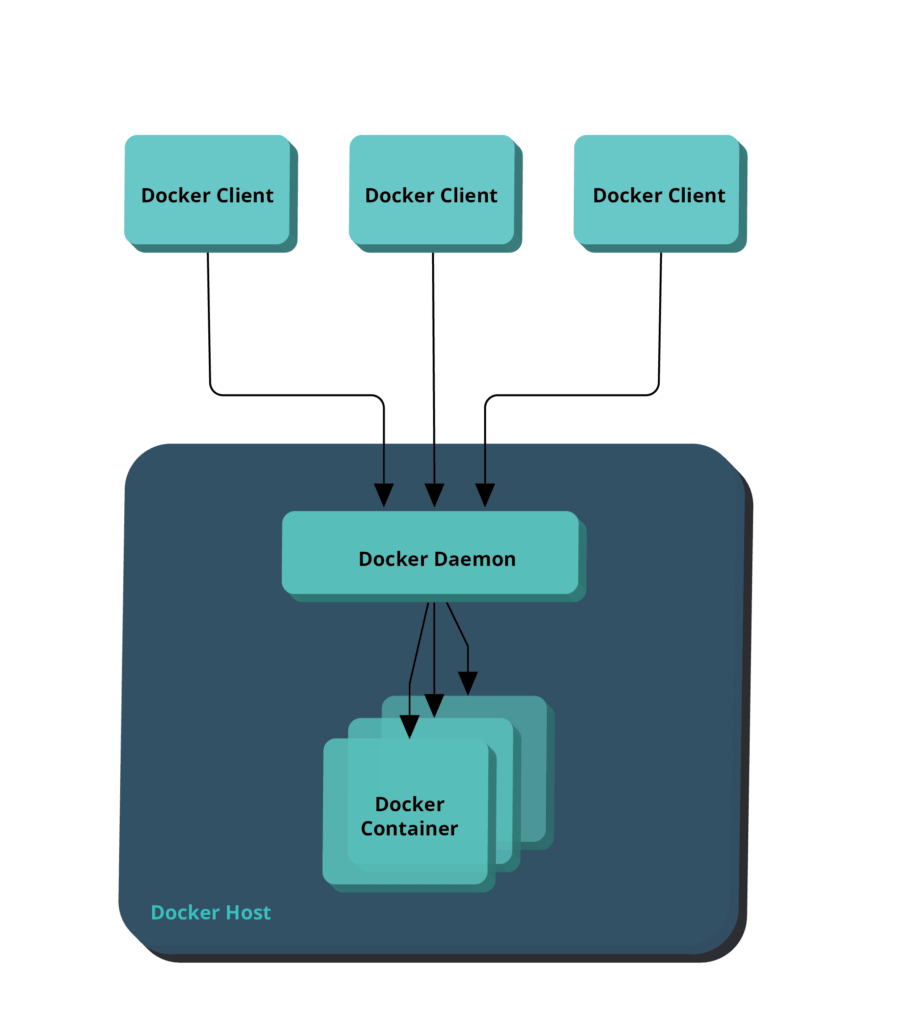

Docker is a client-server application. The Docker client talks to the Docker server, or daemon, which in turn does all the work. You’ll also sometimes see the Docker daemon called the Docker Engine. Docker ships with a command-line client binary, docker, as well as a full RESTful API for interacting with the daemon, dockerd.
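To make the client-server split concrete, here is a minimal sketch of a client that talks to the daemon's RESTful API directly, using only the Python standard library. It assumes a Linux host where the daemon listens on the default Unix socket, `/var/run/docker.sock`, and it queries the Engine API's `/version` endpoint. The class and function names are our own and are purely illustrative.

```python
import http.client
import json
import socket

# Assumption: the daemon listens on the default Linux socket path.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path=DOCKER_SOCKET):
    """Ask the daemon for its version details and return them as a dict."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running daemon (and permission to read the socket), `daemon_version()` should return a dictionary that includes keys such as `Version` and `ApiVersion`. The docker command-line client makes the same kind of requests on your behalf.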

You can run the Docker daemon and client on the same host or connect your local Docker client to a remote daemon running on another host. You can see Docker’s architecture depicted here:

Images are the building blocks of the Docker world. You launch your containers from images. Images are the “build” part of Docker’s life cycle. They use a layered format, based on union file systems, and are built step by step from a series of instructions.

For example:

You can consider images to be the “source code” for your containers. They are highly portable and can be shared, stored, and updated. In the book, we’ll learn how to use existing images as well as build our own images.
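The layered, union-style format described above can be sketched with a toy model. This is not how Docker's storage drivers are implemented; it only illustrates the idea that an image is a stack of layers in which newer layers shadow older ones. The layer names and file contents are hypothetical.

```python
from collections import ChainMap

# Hypothetical layers, oldest first. Each maps a file path to its contents.
base_layer = {"/etc/os-release": "debian", "/bin/sh": "shell binary"}
package_layer = {"/usr/sbin/nginx": "nginx binary"}
config_layer = {
    "/etc/nginx/nginx.conf": "worker_processes 1;",
    "/etc/os-release": "debian (patched)",  # shadows the base layer's copy
}


def union_view(layers):
    """Merge layers into one view in which later layers shadow earlier ones."""
    # ChainMap searches its maps left to right, so the newest layer goes first.
    return ChainMap(*reversed(layers))


image = union_view([base_layer, package_layer, config_layer])

print(image["/etc/os-release"])  # the newest layer's version of the file
print(sorted(image))             # every path visible in the merged view
```

Each instruction in a build adds a layer on top of the previous ones, and the lower layers are left untouched. That is what lets several images share a common base.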

Docker stores the images you build in registries. There are two types of registries: public and private. Docker, Inc., operates the public registry for images, called the Docker Hub. You can create an account on the Docker Hub and use it to share and store your own images.

The Docker Hub also contains, at last count, over 10,000 images that other people have built and shared. Want a Docker image for an Nginx web server, the Asterisk open source PABX system, or a MySQL database? All of these are available, along with a whole lot more.
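Because images can live in either the public Docker Hub or a private registry, an image name has to say where the image comes from. The sketch below is a simplified take on the usual naming convention. It assumes that a first path component containing a dot or a colon (or equal to `localhost`) names a registry host, that the Docker Hub (`docker.io`) is the default registry, and that `latest` is the default tag. It deliberately ignores digests, and the function name is our own.

```python
def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digests such as name@sha256:... are not handled.
    """
    registry = "docker.io"  # assumption: Docker Hub is the default registry
    first, slash, rest = ref.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        # The first component looks like a host name, so treat it as a registry.
        registry, remainder = first, rest
    else:
        remainder = ref

    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        # No tag given, so fall back to the conventional default.
        name, tag = remainder, "latest"

    if registry == "docker.io" and "/" not in name:
        # Official Docker Hub images live under the "library" namespace.
        name = "library/" + name
    return registry, name, tag


for example in ["nginx", "mysql:8", "localhost:5000/team/app:1.0"]:
    print(example, "->", parse_image_ref(example))
```

The last example uses a hypothetical private registry running on `localhost:5000`; the first two resolve to the public Docker Hub.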

Docker helps you build and deploy containers inside of which you can package your applications and services. As we’ve just learned, containers are launched from images and can contain one or more running processes. You can think about images as the building or packing aspect of Docker and the containers as the running or execution aspect of Docker.

A Docker container is:

Docker borrows the concept of the standard shipping container, used to transport goods globally, as a model for its containers. But instead of shipping goods, Docker containers ship software.

Each container contains a software image, its “cargo”, and, like its physical counterpart, allows a set of operations to be performed. For example, it can be created, started, stopped, restarted, and destroyed.
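The set of operations mentioned above can be pictured as a small state machine. The following is a toy model only: the state names and transition rules are our own simplification and do not claim to mirror the Docker daemon's internals.

```python
class ToyContainer:
    """Toy model of a container's life cycle; not Docker's real implementation."""

    # operation -> (states it may be applied from, resulting state)
    TRANSITIONS = {
        "start": ({"created", "stopped"}, "running"),
        "stop": ({"running"}, "stopped"),
        "restart": ({"running", "stopped"}, "running"),
        "destroy": ({"created", "stopped"}, "destroyed"),
    }

    def __init__(self, image):
        self.image = image          # the container's "cargo"
        self.state = "created"
        self.history = ["created"]  # every state the container has been in

    def apply(self, operation):
        """Apply one life-cycle operation, rejecting invalid transitions."""
        allowed_from, new_state = self.TRANSITIONS[operation]
        if self.state not in allowed_from:
            raise ValueError(f"cannot {operation} a {self.state} container")
        self.state = new_state
        self.history.append(new_state)
        return self  # allows chaining several operations


container = ToyContainer("nginx")
container.apply("start").apply("stop").apply("restart").apply("stop").apply("destroy")
print(container.history)
```

The point of the model is that every container, whatever its cargo, accepts the same small set of operations.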

Docker also doesn’t care where you ship your container: you can build on your laptop, upload to a registry, then download to a physical or virtual server, test, deploy to a cluster of a dozen Amazon EC2 hosts, and run. Like a normal shipping container, it is interchangeable, stackable, portable, and as generic as possible.
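As a rough illustration of that build, upload, download, and run cycle, the sketch below assembles the corresponding docker command lines without executing them. The image name `example/web:1.0` and the helper name are hypothetical; `build`, `push`, `pull`, and `run` are standard docker subcommands.

```python
import shlex


def ship_commands(image, build_context="."):
    """Return the docker CLI invocations for a build, push, pull, run cycle."""
    return [
        ["docker", "build", "-t", image, build_context],  # build on your laptop
        ["docker", "push", image],                         # upload to a registry
        ["docker", "pull", image],                         # download on the target host
        ["docker", "run", "-d", image],                    # run it in the background
    ]


# Print the commands instead of running them, so no Docker install is needed.
for command in ship_commands("example/web:1.0"):
    print(shlex.join(command))
```

To actually run the commands, each list could be passed to `subprocess.run` on a host with Docker installed. The last two steps would normally happen on the server rather than on the laptop.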

With Docker, we can quickly build an application server, a message bus, a utility appliance, a CI testbed for an application, or one of a thousand other possible applications, services, and tools. It can build local, self-contained test environments or replicate complex application stacks for production or development purposes. The possible use cases are endless.

So, what’s the big deal about Docker and containers in general? We’ve briefly discussed the isolation that containers provide; as a result, they make excellent sandboxes for a variety of testing purposes. Additionally, because of their “standard” nature, they also make excellent building blocks for services. Some examples of Docker running in the wild include:

What is certain is that Docker technology is expanding. In this post, we explained what Docker is and described its basic components and characteristics. In our next blog post, you will learn more about Dockerfiles, commands, and Docker’s technological components.